Data file: neural.csv

Artificial neural networks have been around for at least 20 years as of this writing. They consist of a series of “neurons” that take data from input sources or other neurons, combine and simplify that data, and pass the results on to other neurons or to output sources. Usually, each neuron “weights” the data it receives. Together, the neurons form the neural network, which becomes effective only when the weights on all of the neurons' inputs have been optimized as a whole to solve a particular problem.

The mathematics of what neurons do can vary, but generally they multiply each stream of incoming data by a weight, then sum those products up. A non-linear “activation” or “squashing” function is then applied to the sum, and the result is passed out of the neuron.
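The weighted-sum-then-squash computation just described can be sketched in a few lines of Python. This is a minimal illustration, assuming tanh as the default activation. The function name `neuron` and the sample values are hypothetical and do not come from ChaosHunter or neural.csv.

```python
import math
from typing import Callable, Sequence

def neuron(inputs: Sequence[float], weights: Sequence[float],
           activation: Callable[[float], float] = math.tanh) -> float:
    """Multiply each input by its weight, sum the products, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return activation(weighted_sum)

# Hypothetical inputs and weights, chosen only to show the call
out = neuron([0.5, -0.2, 0.9], [1.2, -0.7, 0.3])
print(out)
```

Passing a different function as `activation` is one way to swap in another squashing function without changing the weighted sum.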

Most neural network software products arrange neurons in a fixed or fairly rigid structure. ChaosHunter instead lets the optimizer connect neurons in whatever way best solves the problem, and the optimizer also finds the weights. The activation function used in ChaosHunter is the hyperbolic tangent (tanh). The output of the final neuron is the output of the neural network. This process is analogous to evolving a brain that then learns from birth: the evolutionary process “evolves” the brain structure, and the network then “learns” the appropriate weights to solve the problem.

Since the tanh function produces values in the range -1 to 1, it is best to limit your constants (from which the weights are chosen) to about +/- 2. If the values you are inputting or predicting fall outside +/- 1, scale both the inputs and the outputs. If you are building a trading model, you do not need to scale the outputs, because you can set your buy/sell thresholds within the +/- 1 range.
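One common way to do this scaling is a linear min-max map onto -1 to 1, sketched below in Python. This is an assumed method for illustration, not necessarily the one ChaosHunter uses, and the helper names `fit_scaler`, `scale`, and `unscale` are hypothetical. The inverse has the form offset + half_range * y, the same shape as the un-scaling formula reported for the example model later in this section.

```python
from typing import Sequence, Tuple

def fit_scaler(values: Sequence[float]) -> Tuple[float, float]:
    """Return (offset, half_range) so that min(values) maps to -1 and max(values) to +1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return (hi + lo) / 2.0, (hi - lo) / 2.0

def scale(x: float, offset: float, half_range: float) -> float:
    """Map a raw value into the -1..+1 range the network works in."""
    return (x - offset) / half_range

def unscale(y: float, offset: float, half_range: float) -> float:
    """Restore a network output to the original units."""
    return offset + half_range * y

targets = [3.0, 10.0, -4.0, 7.5]  # hypothetical raw output values
offset, half_range = fit_scaler(targets)
scaled = [scale(t, offset, half_range) for t in targets]
restored = [unscale(s, offset, half_range) for s in scaled]
print(offset, half_range, scaled)
```

Because tanh only approaches -1 and +1 without reaching them, a pure min-max map places the extreme targets where the network can never land exactly; mapping onto a slightly narrower range, such as -0.9 to 0.9, is one option if that matters for your problem.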

The tanh function itself is available as an operator in the Neural category, as is the sigmoid function, another function often used as an activation function in neural networks.
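This section does not define ChaosHunter's sigmoid operator. Assuming it is the standard logistic function 1 / (1 + e^-x), the Python sketch below compares it with tanh. The two are closely related: tanh(x) = 2 * sigmoid(2x) - 1, so tanh is a sigmoid stretched from the range 0 to 1 onto the range -1 to 1. The function names are illustrative only.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x); outputs lie strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_via_sigmoid(x: float) -> float:
    """tanh expressed through the sigmoid: tanh(x) = 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

samples = (-3.0, -1.0, 0.0, 0.5, 2.0)
for x in samples:
    print(f"x={x:+.1f}  tanh={math.tanh(x):+.6f}  sigmoid={sigmoid(x):.6f}")
```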

There are three neuron types, Neuron2, Neuron3, and Neuron4, named for the number of inputs each takes (in the formulas these are abbreviated n2, n3, and n4). Neuron2, for example, takes two inputs plus two further parameters that become weights, so it computes tanh(weight1*input1 + weight2*input2), where * means multiplication. The weights are usually constants, but evolution may decide to use another input as a weight. The optimizer places no restrictions on how it builds neural nets.
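The n2, n3, and n4 neurons can be sketched in Python as below. The argument order is an assumption: this sketch reads the 2n arguments of an n-input neuron in consecutive pairs and multiplies each pair, which is the reading consistent with the evolved formula and the note on its weights in this section. Because multiplication commutes, it does not matter which member of a pair is the "input" and which is the "weight". The factory `make_neuron` is hypothetical.

```python
import math
from typing import Callable

def make_neuron(n_inputs: int) -> Callable[..., float]:
    """Return an n-input neuron function that expects 2 * n_inputs arguments."""
    def neuron(*args: float) -> float:
        if len(args) != 2 * n_inputs:
            raise TypeError(f"n{n_inputs} expects {2 * n_inputs} arguments, got {len(args)}")
        # ASSUMPTION: arguments are multiplied in consecutive pairs; the order
        # inside a pair (input, weight) or (weight, input) does not matter.
        return math.tanh(sum(x * w for x, w in zip(args[0::2], args[1::2])))
    neuron.__name__ = f"n{n_inputs}"
    return neuron

n2, n3, n4 = make_neuron(2), make_neuron(3), make_neuron(4)

x1, x2 = 0.3, -0.6  # hypothetical input values

# Usual case: constant weights 0.8 and -1.5, i.e. tanh(0.8*x1 - 1.5*x2)
print(n2(0.8, x1, -1.5, x2))

# Also allowed: input x2 serves as the weight on input x1
print(n2(x2, x1, 0.5, 0.5))
```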

We built a neural model using the data from the Polynomial example. The inputs were already within +/- 1, so we scaled only the output. The model takes a while to optimize (here we can call it evolving and learning), because it searches not only for neural structures but also for better and better weights. We stopped the run after about 20 minutes, when the mean squared error was changing only slightly; at that point it had produced the following neural network:
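The stopping decision described above was made by hand, but the rule of thumb behind it (stop when the mean squared error has stopped improving meaningfully) can be written down. The Python sketch below is hypothetical: `has_plateaued`, its `window`, and its `tol` values are illustrative choices, not ChaosHunter settings.

```python
from typing import Sequence

def mse(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def has_plateaued(history: Sequence[float], window: int = 5, tol: float = 1e-3) -> bool:
    """True if the best MSE improved by less than `tol` (relative) over the last `window` entries."""
    if len(history) <= window:
        return False  # not enough history to judge yet
    best_before = min(history[:-window])
    best_now = min(history)
    return (best_before - best_now) <= tol * best_before

# Hypothetical MSE values recorded during a run
print(has_plateaued([1.0, 0.5, 0.3, 0.2999, 0.2999, 0.2999, 0.2999, 0.2999]))
```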

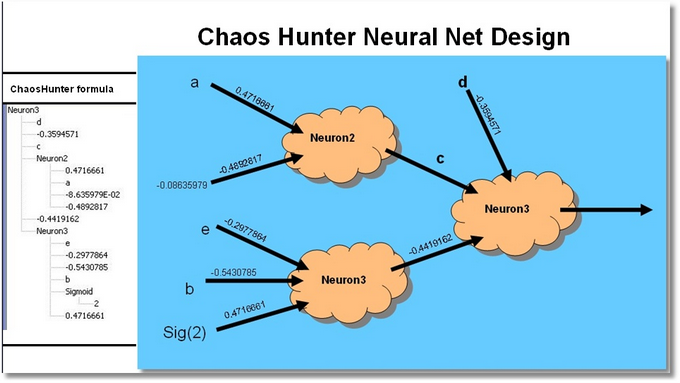

Actual = n3(d, (-0.3594571), c, n2(0.4716661, a, (-8.635979E-02), (-0.4892817)), (-0.4419162), n3(e, (-0.2977864), (-0.5430785), b, sigmoid(2), 0.4716661))

Note that ChaosHunter decided that the connection weight from Neuron2 to the second-layer Neuron3 should be one of the input variables (c), and that in two other places the inputs should be constants, namely sigmoid(2) and the value -0.08635979 (written -8.635979E-02 in the formula). These choices show how flexibly ChaosHunter builds neural networks.

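The network's output is then restored from the internal range to the original units by the following un-scaling, where Actual on the right-hand side is the raw network output. Because tanh keeps that raw output strictly between -1 and 1, the restored prediction always falls between 5.223723 - 17.89858 = -12.674857 and 5.223723 + 17.89858 = 23.122303: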

Actual = 5.223723 + 17.89858 * Actual
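To evaluate the evolved model outside ChaosHunter, the formula and its un-scaling can be transcribed into Python as below. Two assumptions are made and labeled in the code: each neuron multiplies its arguments in consecutive pairs before applying tanh, and sigmoid is the standard logistic function. The input values for a through e are hypothetical; in practice they would come from the corresponding columns of the data.

```python
import math

def _tanh_of_pairs(args, arity: int) -> float:
    # ASSUMPTION: an n-input neuron takes 2*n arguments, multiplies them in
    # consecutive pairs, sums the products and applies tanh.
    if len(args) != 2 * arity:
        raise TypeError(f"n{arity} expects {2 * arity} arguments, got {len(args)}")
    return math.tanh(sum(x * w for x, w in zip(args[0::2], args[1::2])))

def n2(*args: float) -> float:
    return _tanh_of_pairs(args, 2)

def n3(*args: float) -> float:
    return _tanh_of_pairs(args, 3)

def sigmoid(x: float) -> float:
    # ASSUMPTION: ChaosHunter's sigmoid is the standard logistic function.
    return 1.0 / (1.0 + math.exp(-x))

def evolved_network(a: float, b: float, c: float, d: float, e: float) -> float:
    """Transcription of the evolved formula; returns the scaled (tanh) output."""
    return n3(d, -0.3594571,
              c, n2(0.4716661, a, -8.635979e-02, -0.4892817),
              -0.4419162, n3(e, -0.2977864, -0.5430785, b, sigmoid(2), 0.4716661))

def unscale(raw: float) -> float:
    """Un-scaling reported for this model: Actual = 5.223723 + 17.89858 * raw."""
    return 5.223723 + 17.89858 * raw

raw = evolved_network(a=0.1, b=-0.2, c=0.3, d=0.0, e=0.5)  # hypothetical inputs
print(f"scaled output: {raw:.6f}  restored Actual: {unscale(raw):.6f}")
```

Setting c to 0 removes the entire Neuron2 branch from the result, whatever the value of a, which is a quick way to see that input c acts as the weight on that connection.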

As with any model you build, a different random seed can produce entirely different neural structures, which may give approximately the same results or, by chance in the evolutionary process, much better ones.

Note: We have included only the data file for this example. The neural problem has so many possible solutions that we did not want to imply that our model was better than any other neural network design. We leave it as an exercise for the user to create another neural model.